
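Helm is the package manager for Kubernetes, similar to yum/apt/homebrew.

Installing and Using Helm

Getting to Know Helm

Helm's key concepts are:

- chart: a bundle of information about an application, including configuration templates for its objects, parameter definitions, dependencies, and documentation

- Repository: a chart repository where charts are stored. It provides an index file listing the chart packages it contains so they can be looked up. Helm can manage multiple repositories at the same time.

- release: when a chart is installed into a Kubernetes cluster, a release is created. A release is a running instance of a chart and represents a running application.

Helm is a package manager, and its packages are charts. With Helm you can:

- create a chart from scratch

- interact with repositories to pull, save, and update charts

- install and uninstall releases in a Kubernetes cluster

- upgrade, roll back, and test releases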

Installation

Download a stable release, for example: https://get.helm.sh/helm-v3.2.4-linux-amd64.tar.gz

# on the k8s-master node

Getting Started 1: Installing WordPress with Helm

# search for chart packages with helm

Getting Started 2: Creating and Installing an nginx Chart

$ helm create nginx

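Chart Template Syntax and Development

Next, we walk through how the nginx chart is implemented and then summarize conventions for developing charts.

Chart Directory Structure

$ tree nginx/

The resource manifests live in templates/, and the data they use comes from values.yaml. Installing a chart means combining the templates with that data to produce manifests Kubernetes understands, and then deploying them to the cluster.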
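Helm's template engine is Go's text/template, extended with extra functions. That means the "templates + values = manifest" idea can be sketched in plain Go. This is a minimal illustration, not Helm's actual code. The template text, the `renderManifest` helper and the values are hypothetical; only the convention of exposing values under `.Values` follows Helm.

```go
package main

import (
	"bytes"
	"fmt"
	"text/template"
)

// deploymentTpl is a trimmed-down, hypothetical template in the spirit of
// templates/deployment.yaml; the real chart template has many more fields.
const deploymentTpl = `apiVersion: apps/v1
kind: Deployment
spec:
  replicas: {{ .Values.replicaCount }}
  template:
    spec:
      containers:
        - image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
`

// renderManifest fills a template with values: templates + values -> manifest.
// As in Helm, the values are exposed to the template under .Values.
func renderManifest(tpl string, values map[string]interface{}) (string, error) {
	// missingkey=error makes a misspelled value name fail instead of rendering "<no value>".
	t, err := template.New("deployment.yaml").Option("missingkey=error").Parse(tpl)
	if err != nil {
		return "", err
	}
	var buf bytes.Buffer
	if err := t.Execute(&buf, map[string]interface{}{"Values": values}); err != nil {
		return "", err
	}
	return buf.String(), nil
}

func main() {
	// Hypothetical values standing in for a parsed values.yaml.
	values := map[string]interface{}{
		"replicaCount": 1,
		"image": map[string]interface{}{
			"repository": "nginx",
			"tag":        "1.19",
		},
	}
	out, err := renderManifest(deploymentTpl, values)
	if err != nil {
		panic(err)
	}
	fmt.Print(out)
}
```

The `missingkey=error` option is a choice made for this sketch. With text/template's default option, a missing map key renders as `<no value>`.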

# view the resource manifests rendered from the templates

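Analyzing the Template Files

Referencing a Named Template and Passing Scope

{{ include "nginx.fullname" . }}

include renders a named template defined in _helpers.tpl and passes it the top-level scope (the trailing .).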
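Go's built-in `{{ template }}` action writes its output directly. Helm's `include` instead returns the rendered text as a string, so it can be piped into further functions. The sketch below is a hypothetical re-implementation of that idea using only the standard library. The `nginx.fullname` rule here is a simplified version of the generated helper, and `renderService` is an illustrative name.

```go
package main

import (
	"bytes"
	"fmt"
	"text/template"
)

// helpersTpl plays the role of a tiny _helpers.tpl (simplified, hypothetical rule).
const helpersTpl = `{{- define "nginx.fullname" -}}
{{ .Release.Name }}-{{ .Chart.Name }}
{{- end -}}`

// serviceTpl references the named template and passes the top-level scope ".".
const serviceTpl = `name: {{ include "nginx.fullname" . }}`

func renderService(scope map[string]interface{}) (string, error) {
	var root *template.Template
	funcs := template.FuncMap{
		// Stand-in for Helm's include: execute a named template and return
		// its output as a string so it can be used inside a pipeline.
		"include": func(name string, data interface{}) (string, error) {
			var buf bytes.Buffer
			err := root.ExecuteTemplate(&buf, name, data)
			return buf.String(), err
		},
	}
	root = template.Must(template.New("_helpers.tpl").Funcs(funcs).Parse(helpersTpl))
	// Associated templates share the function map and the named definitions.
	if _, err := root.New("service.yaml").Parse(serviceTpl); err != nil {
		return "", err
	}
	var buf bytes.Buffer
	if err := root.ExecuteTemplate(&buf, "service.yaml", scope); err != nil {
		return "", err
	}
	return buf.String(), nil
}

func main() {
	scope := map[string]interface{}{
		"Release": map[string]interface{}{"Name": "web"},
		"Chart":   map[string]interface{}{"Name": "nginx"},
	}
	out, err := renderService(scope)
	if err != nil {
		panic(err)
	}
	fmt.Println(out) // name: web-nginx
}
```

Passing `.` gives the named template access to `.Release` and `.Chart`. If a narrower value were passed instead, those fields would not resolve inside the helper.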

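Built-in Objects

.Values

- Release: describes the release itself. It contains several fields:
  - Release.Name: the release name
  - Release.Namespace: the namespace the release is installed into
  - Release.IsUpgrade: true if the current operation is an upgrade or a rollback
  - Release.IsInstall: true if the current operation is an install
  - Release.Revision: the release's revision number; it is 1 on install and increases with every upgrade or rollback
  - Release.Service: the service rendering the current template; in Helm this is always Helm

- Values: the values passed into the templates from values.yaml and from user-supplied values files

- Chart: the contents of Chart.yaml; any data in that file is accessible, for example {{ .Chart.Name }}-{{ .Chart.Version }} renders as mychart-0.1.0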
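To make the shape of the top-level scope `.` concrete, here is a minimal sketch that models it with Go structs. The names `TopScope`, `ReleaseInfo` and `ChartInfo` are hypothetical, and they carry only the fields listed above. Helm's real types are different and much richer.

```go
package main

import (
	"bytes"
	"fmt"
	"text/template"
)

// ReleaseInfo mirrors the Release fields described above (hypothetical type).
type ReleaseInfo struct {
	Name      string
	Namespace string
	IsInstall bool
	IsUpgrade bool
	Revision  int
	Service   string
}

// ChartInfo holds a few fields that would come from Chart.yaml (hypothetical type).
type ChartInfo struct {
	Name       string
	Version    string
	AppVersion string
}

// TopScope is what "." refers to at the top level of a template in this sketch.
type TopScope struct {
	Release ReleaseInfo
	Values  map[string]interface{}
	Chart   ChartInfo
}

func renderScope(tpl string, scope TopScope) (string, error) {
	t, err := template.New("example").Parse(tpl)
	if err != nil {
		return "", err
	}
	var buf bytes.Buffer
	if err := t.Execute(&buf, scope); err != nil {
		return "", err
	}
	return buf.String(), nil
}

func main() {
	scope := TopScope{
		Release: ReleaseInfo{Name: "nginx-1", Namespace: "test", IsInstall: true, Revision: 1, Service: "Helm"},
		Values:  map[string]interface{}{"replicaCount": 2},
		Chart:   ChartInfo{Name: "mychart", Version: "0.1.0"},
	}
	out, err := renderScope("{{ .Chart.Name }}-{{ .Chart.Version }} ns={{ .Release.Namespace }} rev={{ .Release.Revision }} replicas={{ .Values.replicaCount }}", scope)
	if err != nil {
		panic(err)
	}
	fmt.Println(out) // mychart-0.1.0 ns=test rev=1 replicas=2
}
```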

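Defining Templates

{{- define "nginx.fullname" -}}

Example

apiVersion: v1

After rendering:

apiVersion: v1

Trimming Whitespace

{{- ... }} trims the whitespace and newlines to its left, and {{ ... -}} trims the whitespace and newlines to its right. The dash must be separated from the rest of the action by a space.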
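Go's text/template implements these trim markers itself, so their effect can be checked directly. This minimal sketch renders the same snippet with and without trimming; the `renderTrim` helper is hypothetical.

```go
package main

import (
	"bytes"
	"fmt"
	"text/template"
)

func renderTrim(tpl string, data interface{}) (string, error) {
	t, err := template.New("trim").Parse(tpl)
	if err != nil {
		return "", err
	}
	var buf bytes.Buffer
	if err := t.Execute(&buf, data); err != nil {
		return "", err
	}
	return buf.String(), nil
}

func main() {
	data := map[string]interface{}{"enabled": true}
	examples := []string{
		// No trim markers: the lines holding if/end leave blank lines behind.
		"a:\n{{ if .enabled }}\n  b: 1\n{{ end }}\nc: 2\n",
		// {{- ... }} removes whitespace, including the newline, to its LEFT.
		"a:\n{{- if .enabled }}\n  b: 1\n{{- end }}\nc: 2\n",
		// {{ ... -}} removes whitespace to its RIGHT; here it glues "]" onto the value.
		"[{{ .enabled -}}   \n  ]",
	}
	for _, tpl := range examples {
		out, err := renderTrim(tpl, data)
		if err != nil {
			panic(err)
		}
		fmt.Printf("%q\n", out)
	}
}
```

The three templates render as "a:\n\n  b: 1\n\nc: 2\n", "a:\n  b: 1\nc: 2\n" and "[true]". This is why charts usually put `{{-` on lines that contain only a control action.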

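Pipelines and Functions

trunc truncates a string. Here 63 is passed to trunc as its argument, because some Kubernetes name fields are limited to 63 characters. trimSuffix removes a trailing "-". Each | passes the result of the previous step as the last argument of the next function:

{{- .Values.fullnameOverride | trunc 63 | trimSuffix "-" }}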

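nindent inserts a newline and then indents every line by the given number of spaces:

  selector:
    matchLabels:
      {{- include "nginx.selectorLabels" . | nindent 6 }}

lower converts the content to lowercase, and quote wraps it in double quotes:

value: {{ include "mytpl" . | lower | quote }}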
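Below is a minimal sketch of nindent, lower and quote working together. The functions are simplified stand-ins for the Sprig versions, written here for illustration. The `.labels` and `.mytpl` inputs are hypothetical strings standing in for the output of the `include` calls above.

```go
package main

import (
	"bytes"
	"fmt"
	"strconv"
	"strings"
	"text/template"
)

// Simplified stand-ins for Sprig's nindent, lower and quote.
var formatFuncs = template.FuncMap{
	// nindent: start with a newline, then indent every line of s by n spaces.
	"nindent": func(n int, s string) string {
		pad := strings.Repeat(" ", n)
		return "\n" + pad + strings.ReplaceAll(s, "\n", "\n"+pad)
	},
	"lower": strings.ToLower,
	// quote: wrap in double quotes, escaping like Go's %q verb.
	"quote": strconv.Quote,
}

const selectorTpl = `  selector:
    matchLabels:
      {{- .labels | nindent 6 }}
value: {{ .mytpl | lower | quote }}
`

func renderSelector(labels, mytpl string) (string, error) {
	t, err := template.New("selector").Funcs(formatFuncs).Parse(selectorTpl)
	if err != nil {
		return "", err
	}
	var buf bytes.Buffer
	if err := t.Execute(&buf, map[string]interface{}{"labels": labels, "mytpl": mytpl}); err != nil {
		return "", err
	}
	return buf.String(), nil
}

func main() {
	out, err := renderSelector("app.kubernetes.io/name: nginx\napp.kubernetes.io/instance: web", "Hello World")
	if err != nil {
		panic(err)
	}
	fmt.Print(out)
}
```

The `{{-` in front of nindent removes the template line's own newline and indentation. nindent then adds back a newline plus exactly 6 spaces before every label line.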

Conditionals: every if must be closed by a matching end.

{{- if .Values.fullnameOverride }}
...
{{- else }}
...
{{- end }}

This is typically used to toggle parts of a template with switches defined in values.yaml:

{{- if not .Values.autoscaling.enabled }}
replicas: {{ .Values.replicaCount }}
{{- end }}

Variables can be defined and then referenced by name elsewhere in the template:

{{- $name := default .Chart.Name .Values.nameOverride }}
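This minimal sketch renders the conditional and variable lines above with Go's text/template. `not`, `if` and `:=` are built into text/template. `default` here is a hypothetical string-only stand-in for Sprig's function, and the chart name and values are made up. Unlike the original line, the variable declaration uses `-}}` so that it leaves no blank line at the top of the output.

```go
package main

import (
	"bytes"
	"fmt"
	"text/template"
)

// defaultString: simplified, string-only stand-in for Sprig's default.
// It returns given unless given is empty, in which case it returns d.
func defaultString(d, given string) string {
	if given == "" {
		return d
	}
	return given
}

const replicasTpl = `{{- $name := default .Chart.Name .Values.nameOverride -}}
name: {{ $name }}
{{- if not .Values.autoscaling.enabled }}
replicas: {{ .Values.replicaCount }}
{{- end }}
`

func renderReplicas(values map[string]interface{}) (string, error) {
	t, err := template.New("deployment").Funcs(template.FuncMap{"default": defaultString}).Parse(replicasTpl)
	if err != nil {
		return "", err
	}
	data := map[string]interface{}{
		"Chart":  map[string]interface{}{"Name": "nginx"},
		"Values": values,
	}
	var buf bytes.Buffer
	if err := t.Execute(&buf, data); err != nil {
		return "", err
	}
	return buf.String(), nil
}

func main() {
	withoutHPA := map[string]interface{}{
		"nameOverride": "",
		"replicaCount": 2,
		"autoscaling":  map[string]interface{}{"enabled": false},
	}
	withHPA := map[string]interface{}{
		"nameOverride": "web",
		"replicaCount": 2,
		"autoscaling":  map[string]interface{}{"enabled": true},
	}
	for _, v := range []map[string]interface{}{withoutHPA, withHPA} {
		out, err := renderReplicas(v)
		if err != nil {
			panic(err)
		}
		fmt.Printf("%q\n", out)
	}
}
```

The values map must contain `nameOverride`, as the generated values.yaml does with an empty string. This string-only `default` cannot cope with a missing key, whereas Sprig's version accepts any type.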

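Working with nested values (with)

      {{- with .Values.nodeSelector }}
      nodeSelector:
        {{- toYaml . | nindent 8 }}
      {{- end }}

with switches the scope (.) to .Values.nodeSelector, and the whole block is skipped if that value is empty. toYaml serializes the value as YAML and handles escaping and special characters, for cases such as "kubernetes.io/role"=master or name="value1,value2".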
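Go's standard library has no YAML encoder, so this sketch replaces toYaml with a `range` over the map to show what `with` does to the scope. That substitution is an assumption for illustration only. `quote` is a simplified stand-in for Sprig's function, and `renderNodeSelector` is a hypothetical helper. text/template visits map keys in sorted order, which keeps the output stable.

```go
package main

import (
	"bytes"
	"fmt"
	"strconv"
	"text/template"
)

// Inside "with", "." is .Values.nodeSelector; if it is empty the block is skipped.
const nodeSelectorTpl = `{{- with .Values.nodeSelector -}}
nodeSelector:
{{- range $key, $value := . }}
  {{ $key }}: {{ $value | quote }}
{{- end }}
{{ end }}`

func renderNodeSelector(sel map[string]interface{}) (string, error) {
	t, err := template.New("nodeSelector").Funcs(template.FuncMap{"quote": strconv.Quote}).Parse(nodeSelectorTpl)
	if err != nil {
		return "", err
	}
	data := map[string]interface{}{"Values": map[string]interface{}{"nodeSelector": sel}}
	var buf bytes.Buffer
	if err := t.Execute(&buf, data); err != nil {
		return "", err
	}
	return buf.String(), nil
}

func main() {
	out, err := renderNodeSelector(map[string]interface{}{
		"kubernetes.io/role": "master",
		"disktype":           "ssd",
	})
	if err != nil {
		panic(err)
	}
	fmt.Print(out)

	empty, err := renderNodeSelector(map[string]interface{}{})
	if err != nil {
		panic(err)
	}
	fmt.Printf("%q\n", empty) // ""
}
```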

default sets a fallback value. Here, if image.tag is empty, the chart's appVersion is used as the image tag:

image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
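A minimal sketch of the image line, again using a hypothetical string-only stand-in for `default`. The repository `nginx` and the appVersion `1.16.0` are made-up values. Because the piped value becomes the last argument, `.Values.image.tag | default .Chart.AppVersion` calls default(AppVersion, tag).

```go
package main

import (
	"bytes"
	"fmt"
	"text/template"
)

// defaultString: simplified, string-only stand-in for Sprig's default.
func defaultString(d, given string) string {
	if given == "" {
		return d
	}
	return given
}

const imageTpl = `image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"`

// renderImage renders the image line for a given tag (hypothetical helper).
func renderImage(tag string) (string, error) {
	t, err := template.New("image").Funcs(template.FuncMap{"default": defaultString}).Parse(imageTpl)
	if err != nil {
		return "", err
	}
	data := map[string]interface{}{
		"Chart": map[string]interface{}{"AppVersion": "1.16.0"},
		"Values": map[string]interface{}{
			"image": map[string]interface{}{"repository": "nginx", "tag": tag},
		},
	}
	var buf bytes.Buffer
	if err := t.Execute(&buf, data); err != nil {
		return "", err
	}
	return buf.String(), nil
}

func main() {
	for _, tag := range []string{"", "alpine"} {
		out, err := renderImage(tag)
		if err != nil {
			panic(err)
		}
		fmt.Println(out)
	}
}
```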

For more syntax, see:

https://helm.sh/docs/topics/charts/

Using Helm

Helm template

hpa.yaml

{{- if .Values.autoscaling.enabled }}

Ways to Set Values

Setting values when creating a release

Using --set

# change the replica count and resource limits
$ helm install nginx-2 ./nginx --set replicaCount=2 --set resources.limits.cpu=200m --set resources.limits.memory=256Mi

Using a values file

$ cat nginx-values.yaml
resources:
  limits:
    cpu: 100m
    memory: 128Mi
  requests:
    cpu: 100m
    memory: 128Mi
autoscaling:
  enabled: true
  minReplicas: 1
  maxReplicas: 3
  targetCPUUtilizationPercentage: 80
ingress:
  enabled: true
  hosts:
    - host: chart-example.test.com
      paths:
        - /

$ helm install -f nginx-values.yaml nginx-3 ./nginx
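Conceptually, Helm starts from the chart's values.yaml, deep-merges any files passed with -f, and then applies --set on top; on conflicts the later source wins. The sketch below models that precedence with two hypothetical helpers, `setValue` and `mergeValues`. It is a simplified illustration, not Helm's implementation: real --set parsing also infers types and supports lists and escaping.

```go
package main

import (
	"fmt"
	"strings"
)

// setValue applies one --set style assignment such as "resources.limits.cpu=200m"
// to a nested map. Simplified: every value stays a string; lists, commas and
// escaping are not handled.
func setValue(values map[string]interface{}, assignment string) error {
	kv := strings.SplitN(assignment, "=", 2)
	if len(kv) != 2 || kv[0] == "" {
		return fmt.Errorf("invalid assignment %q", assignment)
	}
	parts := strings.Split(kv[0], ".")
	m := values
	for _, p := range parts[:len(parts)-1] {
		next, ok := m[p].(map[string]interface{})
		if !ok {
			// Create (or replace a non-map value with) an intermediate map.
			next = map[string]interface{}{}
			m[p] = next
		}
		m = next
	}
	m[parts[len(parts)-1]] = kv[1]
	return nil
}

// mergeValues returns base deep-merged with override: override wins on
// conflicts, and nested maps are merged key by key instead of replaced.
func mergeValues(base, override map[string]interface{}) map[string]interface{} {
	out := make(map[string]interface{}, len(base))
	for k, v := range base {
		out[k] = v
	}
	for k, v := range override {
		bm, baseIsMap := out[k].(map[string]interface{})
		om, overrideIsMap := v.(map[string]interface{})
		if baseIsMap && overrideIsMap {
			out[k] = mergeValues(bm, om)
		} else {
			out[k] = v
		}
	}
	return out
}

func main() {
	// Hypothetical chart defaults (values.yaml) and a values file passed with -f.
	defaults := map[string]interface{}{
		"replicaCount": 1,
		"resources": map[string]interface{}{
			"limits": map[string]interface{}{"cpu": "100m", "memory": "128Mi"},
		},
	}
	fileValues := map[string]interface{}{
		"resources": map[string]interface{}{
			"limits": map[string]interface{}{"memory": "256Mi"},
		},
	}
	setValues := map[string]interface{}{}
	for _, a := range []string{"replicaCount=2", "resources.limits.cpu=200m"} {
		if err := setValue(setValues, a); err != nil {
			panic(err)
		}
	}
	// Precedence: chart defaults < -f values file < --set.
	final := mergeValues(mergeValues(defaults, fileValues), setValues)
	fmt.Println(final["replicaCount"]) // 2
	fmt.Println(final["resources"])    // map[limits:map[cpu:200m memory:256Mi]]
}
```

The deep merge is why the values file above only needs to list the keys it changes. Everything else keeps the chart's defaults.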

Viewing the Rendered Manifests

Use helm template to render the templates locally and inspect the result:

$ helm -n test template nginx ./nginx --set replicaCount=2 --set image.tag=alpine --set autoscaling.enabled=true

Hands-on: Deploying Harbor (an Image and Chart Repository) with Helm

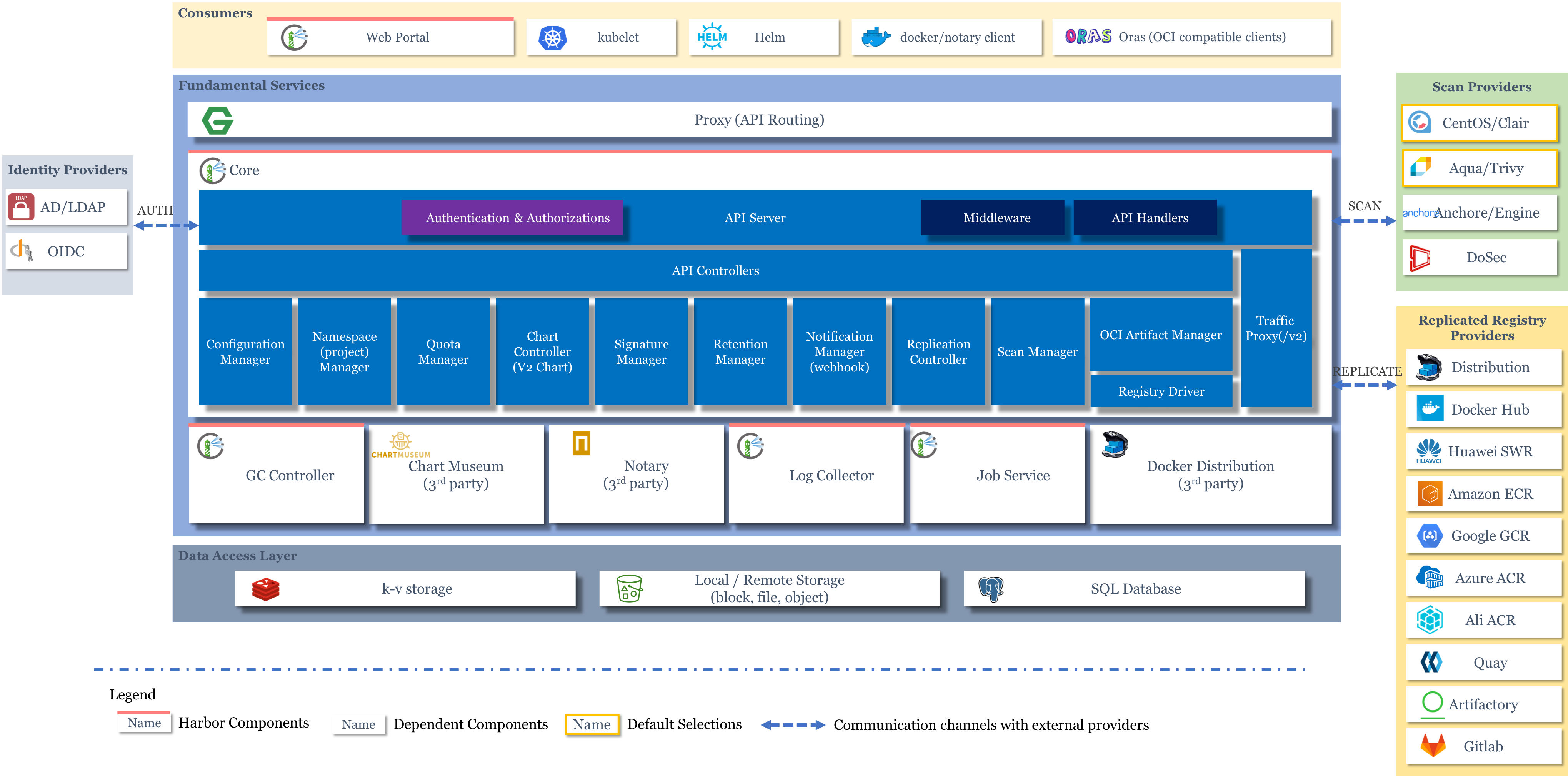

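Harbor Architecture

Architecture overview: https://github.com/goharbor/harbor/wiki/Architecture-Overview-of-Harbor

- Core: the core services
  - API Server: receives and processes user requests
  - Config Manager: all system configuration, such as authentication, email, and certificate settings
  - Project Manager: project management
  - Quota Manager: quota management
  - Chart Controller: chart management
  - Replication Controller: image replication controller that synchronizes images with different types of registries, such as:
    - Distribution (docker registry)
    - Docker Hub
    - …
  - Scan Manager: scan management; integrates third-party components to scan images for security vulnerabilities
  - Registry Driver: image registry driver, currently docker registry
- Job Service: runs asynchronous tasks, such as synchronizing image information
- Log Collector: unified log collector that gathers logs from all modules
- GC Controller
- Chart Museum: chart repository service (third-party)
- Docker Registry: image registry service
- kv-storage: Redis cache service, used by the job service to store job metadata
- local/remote storage: storage services, for example for image storage
- SQL Database: PostgreSQL, stores metadata such as users and projects

Harbor is typically used as an enterprise-grade image registry, and it is far more capable than a plain registry.

Because it has so many components, we deploy it with Helm.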

Preparing the repo

# add the harbor chart repository

Creating the PVC

$ kubectl create namespace harbor

Modifying the Helm configuration

Modify the Harbor configuration:

Ingress access settings (lines 36 and 46):

ingress:
  hosts:
    core: harbor.test.com
    notary: notary.harbor.domain
  # set to the type of ingress controller if it has specific requirements.
  # leave as `default` for most ingress controllers.
  # set to `gce` if using the GCE ingress controller
  # set to `ncp` if using the NCP (NSX-T Container Plugin) ingress controller
  # set to `alb` if using the ALB ingress controller
  controller: default
  ## Allow .Capabilities.KubeVersion.Version to be overridden while creating ingress
  kubeVersionOverride: ""
  className: "nginx"

externalURL is the web entry point and must match the ingress domain (line 126):

126 externalURL: https://harbor.test.com

Persistence, using a PVC backed by NFS (lines 215, 220, 225, 227, 249, 251, 258, 260). All components share the single PVC harbor-data, and each one uses its own subPath:

204 persistence:
205   enabled: true
206   # Setting it to "keep" to avoid removing PVCs during a helm delete
207   # operation. Leaving it empty will delete PVCs after the chart deleted
208   # (this does not apply for PVCs that are created for internal database
209   # and redis components, i.e. they are never deleted automatically)
210   resourcePolicy: "keep"
211   persistentVolumeClaim:
212     registry:
213       # Use the existing PVC which must be created manually before bound,
214       # and specify the "subPath" if the PVC is shared with other components
215       existingClaim: "harbor-data"
216       # Specify the "storageClass" used to provision the volume. Or the default
217       # StorageClass will be used (the default).
218       # Set it to "-" to disable dynamic provisioning
219       storageClass: ""
220       subPath: "registry"
221       accessMode: ReadWriteOnce
222       size: 5Gi
223       annotations: {}
224     chartmuseum:
225       existingClaim: "harbor-data"
226       storageClass: ""
227       subPath: "chartmuseum"
228       accessMode: ReadWriteOnce
229       size: 5Gi
230       annotations: {}
246     # If external database is used, the following settings for database will
247     # be ignored
248     database:
249       existingClaim: "harbor-data"
250       storageClass: ""
251       subPath: "database"
252       accessMode: ReadWriteOnce
253       size: 1Gi
254       annotations: {}
255     # If external Redis is used, the following settings for Redis will
256     # be ignored
257     redis:
258       existingClaim: "harbor-data"
259       storageClass: ""
260       subPath: "redis"
261       accessMode: ReadWriteOnce
262       size: 1Gi
263       annotations: {}
264     trivy:
265       existingClaim: "harbor-data"
266       storageClass: ""
267       subPath: "trivy"
268       accessMode: ReadWriteOnce
269       size: 5Gi
270       annotations: {}

Admin account password (line 382):

382 harborAdminPassword: "Harbor12345!"

The Trivy vulnerability scanner and the Notary image-signing component are not enabled for now (lines 639 and 711):

637 trivy:
638   # enabled the flag to enable Trivy scanner
639   enabled: false
640   image:
641     # repository the repository for Trivy adapter image
642     repository: goharbor/trivy-adapter-photon
643     # tag the tag for Trivy adapter image
644     tag: v2.6.2
710 notary:
711   enabled: false
712   server:
713     # set the service account to be used, default if left empty
714     serviceAccountName: ""
715     # mount the service account token
716     automountServiceAccountToken: false

Installing with Helm

# install from the local chart

Pushing Images to Harbor

Configure /etc/hosts and Docker's insecure registries:

$ cat /etc/hosts

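Pushing Charts to Harbor

Helm 3 does not ship with the helm push plugin, so it has to be installed manually. Plugin repository: https://github.com/chartmuseum/helm-push. In plugin v0.10.0 and later, the command is named helm cm-push, because Helm 3.7+ has its own built-in helm push for OCI registries.

Installing the plugin

Online installation

$ helm plugin install https://github.com/chartmuseum/helm-push

Offline installation

$ mkdir helm-push

Adding the repo

$ helm repo add myharbor https://harbor.test.com/chartrepo/test

Push the chart to the repository:

$ helm push harbor test --ca-file=harbor.ca.crt -u admin -p Harbor12345!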

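Hands-on: Deploying an NFS StorageClass with Helm

Preparing the repo

# add the nfs-subdir-external-provisioner chart repository

Modifying the Helm configuration

We set up an NFS server earlier at 192.168.100.1, with / as the shared directory, so we only need to update the corresponding settings.

$ tar xf nfs-subdir-external-provisioner-4.0.18.tgz

Installing with Helm

# create the namespace

Verifying the StorageClass

# check the pod status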

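Hands-on: Deploying an Ingress Controller with Helm

Preparing the repo

# add the ingress-nginx chart repository

Modifying the Helm configuration

$ tar xf ingress-nginx-4.8.3.tgz

Installing with Helm

# label the nodes; every node that should run the ingress controller needs this label

Verifying the Ingress Controller

# check the pod status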